A Day in the Life of a Data Scientist with Teradata Vantage

Sri Raghavan discusses why Teradata Vantage is the perfect platform for data scientists.

How is this for a bold statement? Data scientists, or so it seems, are the lifeblood of the analytics universe. Having said that, and having opened myself to a world of justifiable lobbing of brickbats, let me deconstruct my hyperbole and say data scientists come in many different types. The types include your statistically inclined data scientists, the ones who tend towards the business use cases, the ones who delve deep into visual depictions of the data, the ones who use mathematical foundations to develop analytic frameworks, the ones who implement algorithms using Python or R, and of course the catch-all group that includes just about every other kind!

While the point is not to polemicize about who or what makes a data scientist, the point ought to be how we can make their lives easier. Every analytics persona has, or should have, the same goal: How do we ensure that the organization we work for is better able to achieve significant business outcomes? It is not about the analytics itself, although analytics does play a large role in their day-to-day life. The crux is delivering a set of answers, and investing in the ability to deliver them.

Which brings me around, rather elliptically, to the question of Teradata Vantage (henceforth, Vantage). The point of this essay/blog (or to loosely paraphrase John Lennon’s self-professed electronic sound as electronic words) is to make the case that Vantage is the perfect analytic platform that enables a data scientist to facilely get on with his or her work and get on with it in a manner that is maximally advantageous to his or her organization.

Vantage, a quick overview

First of all, as a refresher, let’s do a recap of Vantage: Vantage is software that comes with its own integrated analytic functions that can be executed across preferred data science languages and tools across multiple platforms (e.g., on-premises, cloud) and by diverse analytics personas on any data type at scale.

Highlights of Vantage include:

- Pre-built SQL, Machine Learning and Graph Engines that come pre-packaged with multi-genre analytic functions/algorithms (e.g., Statistics, Machine Learning, Text & Sentiment Extraction, Graph Centrality, Decision Trees, Dataprep, Geospatial & Temporal).

- Embedded persistent MPP storage (Teradata Database) and a high-speed data fabric that ensures connectivity to external data sources (e.g., Hadoop, Spark, Enterprise Data Warehouses).

- Multi-form factor availability with license portability across platforms. These include Teradata optimized hardware (IntelliFlex), Public Cloud (e.g., AWS, Azure), and Private Cloud (IntelliCloud).

- Analytic engine extensibility to include open source engines such as TensorFlow and Spark.

- Choice of Data Science languages such as SQL, Python, and R, and tools such as Teradata SQL Studio, Jupyter Notebooks, RStudio, Teradata AppCenter and more.

- A highly scalable environment with analytic engines built in Docker containers meant for extreme flexibility and performance on any data volume – regardless of whether it is installed on the cloud or on-premises.

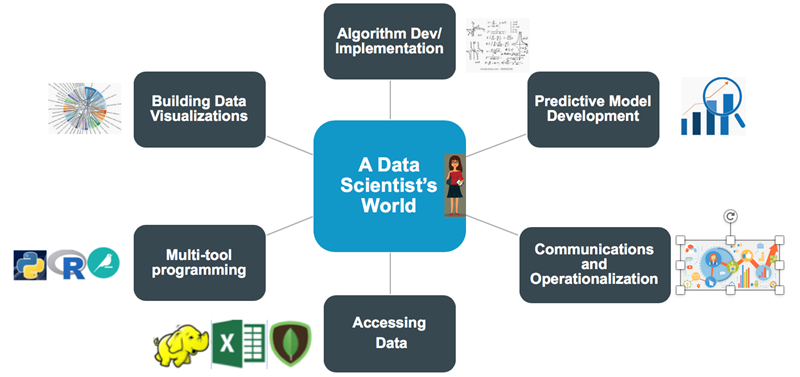

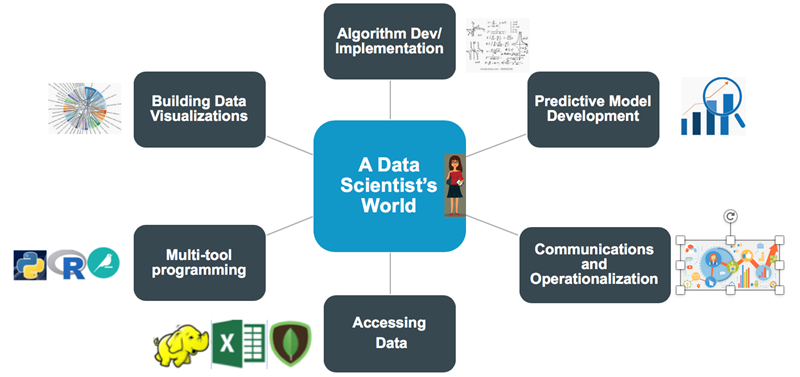

Day in the life – what does a data scientist indeed do?

Now, before the punctilious amongst us zoom in to make the point that this is not an exhaustive list (and it isn’t), it is safe to say that on any given day, a data scientist grapples with a good number of these functions.

That being the case, let’s look at the various pressures that data scientists are likely to face when trying to do all or a majority of these things:

- Data Ingestion: In today’s world, data can rarely be ingested easily from just one source. Indeed, there is no enterprise that I have been a part of where one dataset/source takes care of all data that are recorded. Today, the proliferation of various sources, often with different data types and mind-bogglingly inconsistent data quality, is the norm. This implies that data scientists routinely spend a significant portion of their time grappling with getting the right data, in the right form, to address their use cases.

- Analytic Languages and Tools: The days when I came of age in the analytics world, when I was forced to choose between one or two languages, are long past. The Ancien Régime is, mercifully, gone. But when there is a plethora of languages and tools – R, Python, SQL, Java, (insert your own) – there is also a desire to use these languages in the analytics workflow. Data Scientists grapple with this need to use a language or tool of their fancy to address their use cases. They do it partly because it is the cool thing to do, for sure (and some call it a resumé play), but they do it also because some choices lend themselves to a quicker realization of insights. Often, they are constrained by their organizations’ choices of analytic tools, which limit this flexibility.

- Algorithm Implementation: Implementing algorithms is perhaps, in my view, a central “virtue” of the data science practice. The problem is that creating an algorithm (or an analytic function) is not easy and takes time. Data Scientists often deal with the painstaking and often rambling process of working through the logic of an analytic workflow that can be rewarding when it teases out insights from the data – and can be frustrating when it all needs to be reworked or needs recombination with other techniques (e.g., path analysis and machine learning classifiers). The latter is often the norm.

- Visualization and Communication: None of the work a Data Scientist does would make a lot of sense if it were articulated only in the distinct argot of that profession. Being able to visualize insights in an easy to understand manner and using a folksy vernacular to communicate said insights is an underrated task and one that carries the biggest value for money. After all, once insights are better communicated it becomes easier to operationalize them. Speaking of…

- Operationalization: This completes the holy circle of the analytic life. How many times have we seen earnest analytic exercises end up on the musty shelves of the science experiment lab, never to see the light of day? According to some estimates, this could be as high as two-thirds of all analytics work done in enterprises (no, I do not have a citation because these numbers are anecdotal). Now, it is inevitable that some analytics work ends in results that cannot bear any fruit for the business and hence is shelved. But, more often than not, the work is shelved because there is no easy way to put it into practice, that is, to operationalize it. Operationalization is how ROI is realized for analytics. Creating a model is analytics. Using the model to take actions on current customer activity is operationalization. Facilitating preferred customer behavior is what drives revenue and satisfaction (the answers), aka, operationalizing the insights.
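To make the data ingestion pressure above a little more concrete, here is a minimal sketch in plain Python of what "getting the right data, in the right form" can look like when two sources describe the same customers differently. Everything in it is an assumption for illustration: the two sources, the field names, the date formats, and the "first record wins" rule are hypothetical and are not taken from Vantage or any Teradata tool.

```python
from datetime import datetime

# Hypothetical records from two sources that describe customers with
# different field names, date formats, and data quality problems.
crm_rows = [
    {"CustomerID": "101", "SignupDate": "2018-03-14", "Spend": "250.00"},
    {"CustomerID": "102", "SignupDate": "2018-07-01", "Spend": ""},
]
web_rows = [
    {"cust_id": 103, "signup": "14/09/2018", "spend_usd": 99.5},
    {"cust_id": 101, "signup": "14/03/2018", "spend_usd": 250.0},
]


def normalize_crm(row):
    """Map a CRM row onto the common schema; an empty spend becomes None."""
    return {
        "customer_id": int(row["CustomerID"]),
        "signup": datetime.strptime(row["SignupDate"], "%Y-%m-%d").date(),
        "spend": float(row["Spend"]) if row["Spend"] else None,
    }


def normalize_web(row):
    """Map a web-log row (day/month/year dates) onto the common schema."""
    return {
        "customer_id": int(row["cust_id"]),
        "signup": datetime.strptime(row["signup"], "%d/%m/%Y").date(),
        "spend": float(row["spend_usd"]),
    }


def combine(crm, web):
    """Normalize both sources and keep one record per customer (first one wins)."""
    merged = {}
    for record in [normalize_crm(r) for r in crm] + [normalize_web(r) for r in web]:
        merged.setdefault(record["customer_id"], record)
    return [merged[key] for key in sorted(merged)]


customers = combine(crm_rows, web_rows)
```

Even in this toy case, most of the code is about reconciling formats rather than analysis, which is the point of the ingestion bullet: the effort goes into the plumbing long before any model is built.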

How does Vantage fit into the data scientist life?

Not surprisingly, this is why Teradata created and delivered Vantage to the market. These pressures noted above that a data scientist routinely faces in his or her quotidian tasks are serious and ones that tend to exert a toll on his or her capability to produce impactful business outcomes. Vantage is the analytics platform that ensures:

- Data connectivity ease through native data stores as well as a QueryGrid high-speed data fabric that provides connectivity to external data sources in a data lake, third party enterprise systems, and other records of choice. This makes a measurable salutary impact on the data scientist’s ability to get access to much needed data.

- The palette of analytic tools and choices that are available today in the first release of Vantage is enough to satisfy the needs of a majority of data science work. From having a wide breadth of choices in languages to client interfaces and workbenches the data scientist can now flex their creativity and showcase their innovative spirit more effectively than when they had a more constrained tool set. But, we are just getting started. Current plans call for more robust additions to this palette.

- The whole idea of delivering an analytics platform is to create the capability to implement algorithms in a multi-genre manner on all data. In this regard, the singular pressure of implementing an algorithm is significantly alleviated by a set of pre-built functions (over 180 across genres that include Time Series, Data Prep, Machine Learning, Statistics, Text & Sentiment) and natively installable Python (teradataml) and R (tdplyr) libraries that cut the time it takes to develop an algorithm from scratch down to a more manageable schedule.

- Pre-built visualization capabilities delivered through Teradata AppCenter and the ability to create purpose-built apps have, as I have seen in numerous customer implementations, rendered a unique expansion in analytics IQ across the board. Imagine a medical professional simply clicking on an app and then seeing the results of an HL7 parser delivered as clean and easy-to-read data. Or imagine a retailer looking at a sigma chart to understand a matrix of products that are connected to each other, which enables them to deliver personalized product packages to the end customer. This ability to succinctly communicate results absolutely makes a difference in anyone’s ability to see their insights gain a wide approbation within their organization.

- Lastly, the irony is not lost on us when we see analytics professionals embrace the open source analytics revolution but stop significantly short of the operationalization mark. It is one thing to deploy cool technology to deliver analytic artistry and an entirely different thing to see insights curated as museum pieces: Something to admire from afar but not malleable to implementation. Well, we at Teradata get it. We have blended key open source technologies along with natively built capabilities on the platform such as scoring algorithms that simply consume a model (e.g., Random Forest) and score the entire database. For example, a retailer can use a Naïve Bayes Classifier model to understand churn categories and then use the Naïve Bayes Scoring function to predict the churn category of each customer in the database. How cool is this?
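For readers who want to see the shape of the "train a model, then score every customer" pattern from the churn example above, here is a minimal sketch in plain Python. It is a stand-in, not the Vantage Naïve Bayes functions and not the teradataml API: the feature names, the training rows, and the `train` and `score` helpers are all hypothetical, and the classifier is a small categorical Naïve Bayes with add-one smoothing written out by hand.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled history: (contract type, support calls) -> churn category.
training = [
    (("monthly", "many"), "high"),
    (("monthly", "many"), "high"),
    (("monthly", "few"), "low"),
    (("annual", "few"), "low"),
    (("annual", "few"), "low"),
    (("annual", "many"), "high"),
]


def train(rows):
    """Count class frequencies and per-feature value frequencies."""
    class_counts = Counter(label for _, label in rows)
    value_counts = defaultdict(Counter)  # (feature index, label) -> value counts
    vocab = defaultdict(set)             # feature index -> distinct values seen
    for features, label in rows:
        for i, value in enumerate(features):
            value_counts[(i, label)][value] += 1
            vocab[i].add(value)
    return class_counts, value_counts, vocab


def score(model, features):
    """Return the most probable class, using add-one (Laplace) smoothing."""
    class_counts, value_counts, vocab = model
    total = sum(class_counts.values())
    best_label, best_logp = None, -math.inf
    for label, n in class_counts.items():
        logp = math.log(n / total)  # log prior for this class
        for i, value in enumerate(features):
            count = value_counts[(i, label)][value]
            logp += math.log((count + 1) / (n + len(vocab[i])))
        if logp > best_logp:
            best_label, best_logp = label, logp
    return best_label


# "Analytics": build the model once from history.
model = train(training)

# "Operationalization": apply the model to every current customer.
current_customers = {
    "c1": ("monthly", "many"),
    "c2": ("annual", "few"),
    "c3": ("monthly", "few"),
}
predictions = {cid: score(model, feats) for cid, feats in current_customers.items()}
```

The split between `train` and `score` mirrors the distinction drawn in the text: the model is built once, and the scoring step is what runs across the whole customer base. On a platform like Vantage that second step would run inside the database rather than in a Python loop.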

Who is Vantage really for?

All this said, Vantage is not just for the data scientist. Despite the fact that we use this term to indicate a laughable monolith that the profession is not, we also built Vantage precisely because not everyone is or wants to be a data scientist. There are a few others who have embraced the more populist version of this profession called citizen data scientists. Citizen data scientists, business analysts and pure business management types all have a common skein of character running through their professional work. They all want to make decisions that are driven by facts and data, much like Sergeant Friday would prefer. Vantage is our effort to transform the investments in analytics into answers that the business can consume, understand, and profit from. That is why we have a new tag line; one that is our creed and one that is our raison d’être – “Invest in Answers”.
